Assess, Analyze, Adjust: Using Mid-Year Data to Drive Student Growth

By Dr. Natalie Fallert

It is January, and many secondary teachers have just finished their semester finals or are getting ready to give them. If you teach middle school and did not give a mid-year content exam, you are probably administering a universal screener¹ such as STAR, i-Ready, or AIMSweb. Hopefully, you are already using the data from these assessments to inform your instruction and planning. If you are not, please consider the benefits this data can provide as you head into the second half of the year.

When I taught high school English, my semester final closely resembled the state’s end-of-course exam. It was not a regurgitation of Of Mice and Men facts. Instead, it was a selection of cold reads and analytical multiple-choice questions that tested students’ ability to apply reading comprehension and analysis strategies independently. The results told me which students had mastered which skills, as well as which skills most students had not yet mastered. By carefully analyzing answers, I could see patterns where many students missed the same questions. I could also see whether most of them chose the same wrong answer, which would point to a “teacher” problem, not a student problem.

Using this data helped me narrow my focus for the second half of the year and make my teaching much more effective and standards-focused.

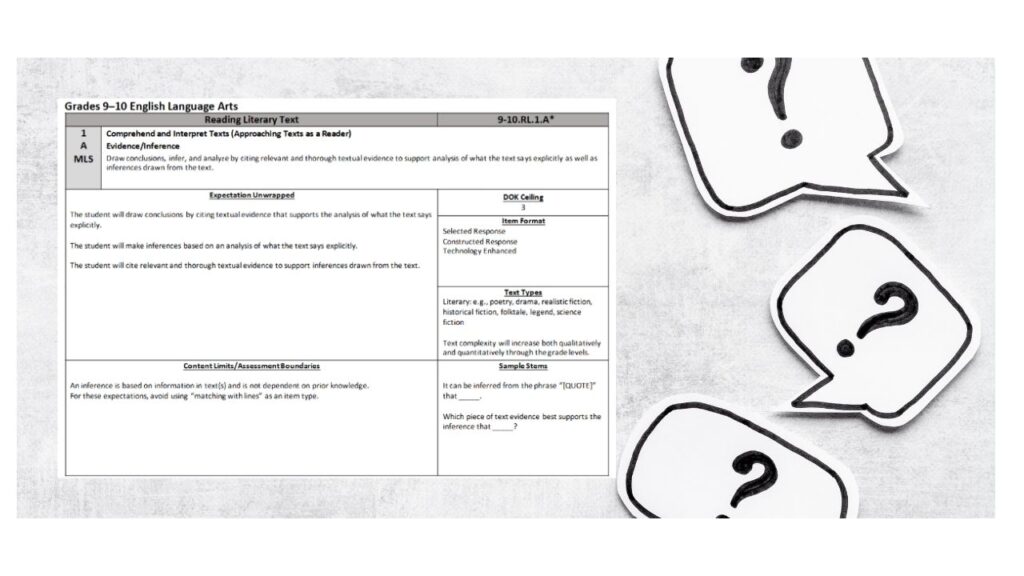

Creating Common Summative Assessments (CSAs)

If you have the privilege or responsibility of creating common summative assessments, make sure your questions align with the standards. It is also essential to write each question at the highest appropriate depth of knowledge rather than crafting only basic recall questions. Study your state assessment to see which standards are assessed most often and how those questions are worded, then make sure your CSAs follow the same patterns. Include two to three questions per standard to provide more reliable and valid data. Some states, like Missouri, even offer sample question stems for specific standards that can aid in question creation.

Analyzing Assessments

If your CSAs are handed to you, it is still imperative that you truly understand what you are assessing. Spend time analyzing the questions and double-checking that they are correctly aligned to the standards. I have written a ton of district, state, and textbook assessments, and we all make mistakes. Don’t be afraid to second-guess something or ask for clarification from colleagues, department chairs, or supervisors.

Analyzing the Data

Once you have a clear understanding of your assessment, it is time to analyze your students’ scores. First, look for patterns. You can examine these patterns by individual student or by question.

Analyzing Individual Student Data

When looking at a student’s performance, check whether they missed questions on one standard more often than on others. They might have missed more questions on a particular passage, which could mean their deficit lies in a specific genre, such as nonfiction versus fiction. Many tests also include two-part questions in which one part is contingent on the other. Is the student getting the first part correct and missing the second? Of course, another possibility is that the student reads multiple grade levels below their current grade, so the text itself becomes the barrier. Unfortunately, that is an entirely different conversation that is not covered here.
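If your testing platform lets you export item-level results, a few lines of code can surface these per-student patterns. The Python sketch below is only an illustration under stated assumptions: the question-to-standard mapping, the standard labels (RL for literature, RI for informational text), the student results, and the 70% cutoff are all hypothetical and are not drawn from any particular assessment or platform.

```python
from collections import defaultdict

# Hypothetical mapping: question number -> standard it assesses.
# Two questions per standard; "RL" = literature, "RI" = informational text.
QUESTION_STANDARDS = {
    1: "RL.1", 2: "RL.1",
    3: "RL.2", 4: "RL.2",
    5: "RI.1", 6: "RI.1",
}

# Hypothetical results: student -> {question number: answered correctly?}
RESULTS = {
    "Student A": {1: True, 2: True, 3: True, 4: True, 5: False, 6: False},
    "Student B": {1: False, 2: True, 3: True, 4: True, 5: True, 6: True},
}


def percent_by_standard(responses, question_standards):
    """Return {standard: percent correct} for one student's responses."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for question, is_correct in responses.items():
        standard = question_standards[question]
        total[standard] += 1
        if is_correct:
            correct[standard] += 1
    return {s: round(100 * correct[s] / total[s]) for s in total}


def weakest_standards(responses, question_standards, cutoff=70):
    """Return the standards (sorted) where the student scored below the cutoff."""
    scores = percent_by_standard(responses, question_standards)
    return sorted(s for s, pct in scores.items() if pct < cutoff)


if __name__ == "__main__":
    for student, responses in RESULTS.items():
        print(student, percent_by_standard(responses, QUESTION_STANDARDS),
              "needs support in:", weakest_standards(responses, QUESTION_STANDARDS))
```

Grouping results by standard rather than by total score is what makes a genre pattern visible: in this made-up data, Student A answered every literature question correctly but missed both informational-text questions.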

Analyzing Question Data

When looking at questions, teachers should consider three things:

- How the question is worded

- The correct answer and the distractors (wrong answer choices)

- How the skill or standard was taught

A question’s wording can confuse or mislead students when the language of the test differs from the language I use in teaching, or when it approaches the skill differently than I taught it. I recall a specific question on parallel structure that I knew my students would miss simply because of its wording and answer choices. This was no fault of mine or the students’; it was simply a fact. Yes, I had covered parallelism in class, and my students had a decent grasp of it, but I had taught it more as a grammatical concept than as a structural one. Another recent example I noticed while working with a school was the use of the term “appeal to authority” versus “rhetorical devices” or “rhetorical appeals.” This slight difference in terminology can skew results and lead a student or teacher to believe the student has not learned the skill when, in reality, the student just did not understand the language.

Distractors can also cause problems on any multiple-choice test; a slight nuance in wording can bait students into choosing a particular wrong answer. Keep both wording and distractors in mind when looking at mid-year data. Doing so can help you focus your instructional time in the right area, such as deciding whether you need to reteach a standard or simply teach students the nuances of answer choices (that is, test-taking strategies).
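The same kind of export can support a question-level review. This hypothetical Python sketch reports, for each question, the percent of students who answered correctly and the most popular wrong choice, and it flags any question where a single distractor drew at least 40% of the class. The answer key, the responses, and the 40% cutoff are made-up assumptions for illustration; a flagged question is a prompt to revisit the wording, the distractors, and how the skill was taught, not a verdict.

```python
from collections import Counter

# Hypothetical answer key: question number -> correct choice.
ANSWER_KEY = {1: "B", 2: "D", 3: "A"}

# Hypothetical responses, one dict per student (question -> choice).
# This sketch assumes every student answered every question.
RESPONSES = [
    {1: "B", 2: "C", 3: "A"},
    {1: "B", 2: "C", 3: "C"},
    {1: "A", 2: "C", 3: "A"},
    {1: "B", 2: "D", 3: "B"},
    {1: "B", 2: "C", 3: "A"},
]


def item_analysis(answer_key, responses, flag_share=0.4):
    """Summarize each question's percent correct and most popular wrong choice.

    A question is flagged when one wrong choice was picked by at least
    `flag_share` of all students (an arbitrary cutoff you can adjust).
    """
    n = len(responses)
    report = {}
    for question, correct in answer_key.items():
        choices = Counter(r[question] for r in responses)
        wrong = Counter({c: k for c, k in choices.items() if c != correct})
        top_distractor, top_count = wrong.most_common(1)[0] if wrong else (None, 0)
        report[question] = {
            "percent_correct": round(100 * choices[correct] / n),
            "top_distractor": top_distractor,
            "flagged": top_count / n >= flag_share,
        }
    return report


if __name__ == "__main__":
    for question, row in item_analysis(ANSWER_KEY, RESPONSES).items():
        print(question, row)
```

With these made-up responses, question 2 stands out: only 20% of students answered correctly, and 80% chose the same distractor, C. That is the pattern that suggests a “teacher” problem worth discussing with your team.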

Analyzing Diagnostic Assessments²

If students take time off from reading over the summer, it can take months for them to regain their previous levels of reading comprehension and fluency. By mid-year, you should see these students back at the skill level they had in May. Students who came in on grade level should stay on that same path so they end the year on grade level. Unfortunately, students who came in below grade level do not have that luxury; they have to cover more ground in less time. Analyzing diagnostic data at mid-year can help you see what is and is not working and adjust your instruction accordingly.
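To make “more ground in less time” concrete, here is a rough back-of-the-envelope check written as a Python sketch. It assumes hypothetical scale scores, a hypothetical end-of-year benchmark, a school year split into two 18-week semesters, and steady (linear) growth. Real screeners such as STAR or i-Ready use their own scales and growth norms, so treat this only as an illustration of the arithmetic.

```python
def growth_check(fall, winter, benchmark, weeks_elapsed, weeks_remaining):
    """Compare a student's observed weekly growth with the weekly growth still needed.

    Assumes steady (linear) growth, which is a simplification: real screeners
    publish their own scale scores and growth norms.
    """
    observed_rate = (winter - fall) / weeks_elapsed
    # No additional growth is "needed" once the benchmark is already met.
    needed_rate = max(benchmark - winter, 0) / weeks_remaining
    return {
        "observed_per_week": round(observed_rate, 1),
        "needed_per_week": round(needed_rate, 1),
        "on_track": observed_rate >= needed_rate,
    }


if __name__ == "__main__":
    # Hypothetical scale scores: same first-semester growth, different starting points.
    print(growth_check(fall=500, winter=518, benchmark=530, weeks_elapsed=18, weeks_remaining=18))
    print(growth_check(fall=440, winter=458, benchmark=530, weeks_elapsed=18, weeks_remaining=18))
```

Both hypothetical students grew about one point per week during the first semester, but only the student who started near the benchmark is on pace. The student who started below it would need to grow roughly four times faster in the second semester to close the gap.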


Quick Tips To Help

- Give students opportunities to read daily.

- Make sure students are reading within their Zone of Proximal Development³: the texts they read independently should be neither too easy nor too hard for them.

- Create small groups based on standards deficits.

- Provide opportunities for additional practice during intervention time instead of just make-up work.

- Consider setting goals with students to engage them in the learning process.

- Present data as a tool to guide progress, avoid any language that might cause shame, and foster a growth mindset to inspire confidence and motivation.

- Analyze data and assessment questions in collaborative teams/PLCs.

Use these tips to make the most of your mid-year check-in, and remember: data is a tool to guide us, not define us.

Mid-year assessments offer a wealth of information that, when analyzed thoughtfully, can shape the trajectory of your instruction and support student growth. By collaboratively analyzing mid-year data, teachers can identify patterns, share strategies, and collectively plan interventions that drive student success. Together, we can turn insights into impact.

1 Universal screening is a systematic assessment process conducted with all students to identify those who may be at risk for challenges in academic, behavioral, social-emotional, or readiness skills. It provides essential data to predict learning gaps at individual, classroom, and grade levels, enabling early interventions that support student success both inside and outside the classroom.

2 A diagnostic assessment is a tool used by educators to gather detailed information about a student’s strengths and weaknesses in specific skill areas or behaviors. These assessments, which can be formal (e.g., standardized tests) or informal (e.g., work samples), help identify skill deficits and challenges. By using multiple measures and data, diagnostic assessments enable teachers to provide targeted, individualized, and data-driven instruction or interventions that effectively address learning needs. Some examples are STAR, i-Ready, and Running Records.

3 Zone of Proximal Development is the difference between what a learner can do without help and what they can do with guidance and encouragement from a skilled partner.

Definition References:

Brown, R., & Harris, J. (2021, May 20). The importance of using diagnostic assessment: 4 TIPS for identifying learner needs. Renaissance. https://www.renaissance.com/2021/05/20/blog-the-importance-of-using-diagnostic-assessment-4-tips-for-identifying-learner-needs/

McLeod, S. (2024, August 9). Vygotsky’s zone of proximal development. Simply Psychology. https://www.simplypsychology.org/zone-of-proximal-development.html

Vanderbilt University. (2025). How will teachers initially identify struggling readers? IRIS Center. https://iris.peabody.vanderbilt.edu/module/rti02/cresource/q2/p02/